Many of the most valuable inventions result from sensing and connecting a range of both new advances and existing technologies – even if these originate in distant and previously unassociated domains. Iprova conducts a large amount of research in this field, some of which forms the basis of our Invention Studio invention platform. Latent spaces are just one area that Iprova has studied and assessed.

So, what is a latent space? A latent space encodes a class of data into a multidimensional space where each ‘point’ can generate a different synthetic data sample, and nearby points generate similar samples. Familiarity with this idea is growing, thanks to widely publicised work using Generative Adversarial Networks (GANs). For example, NVIDIA’s StyleGAN3 generates high-quality images of synthetic human faces. Recent work has explored the use of latent spaces for creativity, for example to design logos or generate music or art. We thought that it would be interesting to explore whether we could encode invention ‘concepts’ in the same way and then explore the resulting space of possible concepts.

Analogy in concept-like latent spaces has been examined using word embeddings, with relationships such as:

A is to B as X is to Y. What is B?

For example: King is to B as Man is to Woman. Answer B = Queen.

Using word embeddings such as GloVe (one of several algorithms that process text to find contextual relationships between words and then encode these into an embedding vector), we can approximately reproduce results like this with simple vector arithmetic. We would like to implement this kind of analogy operation. However, unlike word embeddings, which only map existing words to locations in their latent space, we want to be able to generate completely new synthetic concepts from the resulting points in the latent space.
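As a rough illustration of the analogy operation, the sketch below solves "King is to B as Man is to Woman" by vector arithmetic followed by a nearest-neighbour search. The three-dimensional vectors are invented for this example and are not real GloVe values, and `solve_analogy` is a hypothetical helper name.

```python
import math

# Toy 3-dimensional embeddings, invented for illustration only.
# Real word embeddings typically have tens to hundreds of dimensions.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.5, 0.5, 0.5],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def solve_analogy(a, x, y, embeddings):
    """Return the word B such that 'a is to B as x is to y'."""
    # Move from a along the direction that leads from x to y.
    target = [va - vx + vy
              for va, vx, vy in zip(embeddings[a], embeddings[x], embeddings[y])]
    # Exclude the query words themselves, then pick the closest remaining word.
    candidates = [w for w in embeddings if w not in (a, x, y)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))

print(solve_analogy("king", "man", "woman", EMBEDDINGS))  # prints "queen"
```

Note that this lookup can only return a word that already exists in the vocabulary, which is exactly the limitation a generative latent space is meant to remove.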

Latent Spaces for Text

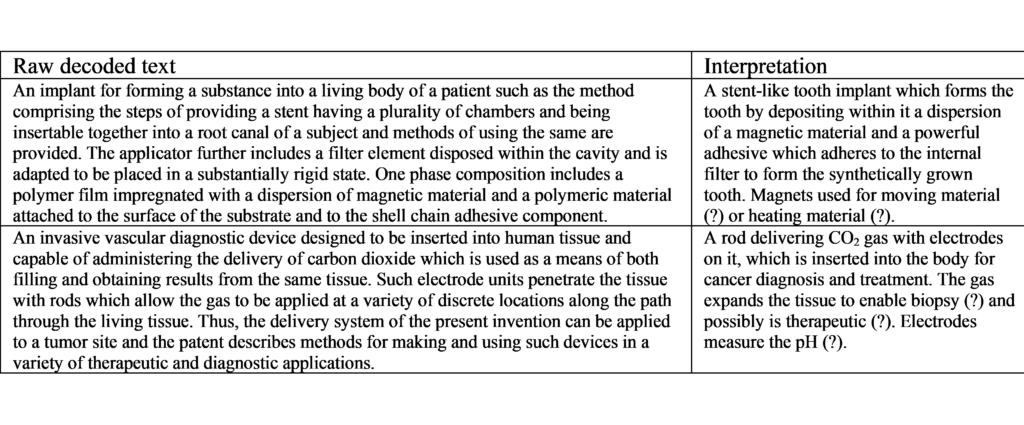

We can get our ‘concepts’ from the text of patent abstracts. However, we then need to generate sensible latent spaces for medium-length text. Using GANs remains difficult, as we cannot simply pass gradients through the selection of discrete text tokens. Denoising variational autoencoders appear to be the best alternative. As with GAN training, several ‘tricks’ need to be used to get good results and avoid problems such as ‘collapse’ of the latent space. In addition, auxiliary tasks such as predicting patent classification classes can be used to try to disentangle the ‘syntax’ (i.e. how the concept is expressed) from the ‘semantics’ (i.e. the underlying meaning of the concept).
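To make the ‘denoising’ and ‘tricks’ ideas more concrete, here is a minimal sketch of two ingredients that are commonly used when training text variational autoencoders: corrupting the input by randomly dropping tokens, and gradually increasing the weight of the KL term (often called KL annealing) to reduce the risk of latent collapse. These are assumptions chosen for illustration; the post does not say which tricks were actually used, and the function names and parameters are hypothetical.

```python
import random

def corrupt_tokens(tokens, drop_prob, rng):
    """Randomly drop tokens from the input.

    The autoencoder is then trained to reconstruct the original,
    uncorrupted sequence from this noisy version.
    """
    kept = [t for t in tokens if rng.random() >= drop_prob]
    # Never return an empty sequence, so the encoder always has some input.
    return kept if kept else [rng.choice(tokens)]

def kl_weight(step, warmup_steps):
    """Linear KL annealing: ramp the KL term's weight from 0 up to 1."""
    return min(1.0, step / warmup_steps)

rng = random.Random(0)  # fixed seed so the example is repeatable
abstract = "a sensor detects motion and wakes the display".split()
noisy = corrupt_tokens(abstract, drop_prob=0.3, rng=rng)
# The training loss would then be: reconstruction_loss + kl_weight(step, N) * kl_term
```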

To deal with long texts with multiple sentences, we implemented a hierarchical sentence and document denoising variational autoencoder. To ensure that the training was robust and comprehensive, 50,000 patent abstracts along with their IPC patent classes were initially used.

Results

Once the text latent space was successfully trained, three approaches were used to explore it for potentially novel patent concepts. In all cases, we only kept new concepts whose ‘position’ was sufficiently different to existing concepts from the training set.
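The novelty filter described above can be sketched as a nearest-neighbour distance check. Euclidean distance and the `min_distance` threshold are assumptions made for this example; the post does not specify which measure of ‘position’ difference was used.

```python
import math

def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def filter_novel(candidates, training_vectors, min_distance):
    """Keep candidates whose nearest training vector is at least min_distance away."""
    return [
        c for c in candidates
        if min(euclidean(c, t) for t in training_vectors) >= min_distance
    ]

# Toy 2-dimensional latent vectors, invented for illustration.
training = [[0.0, 0.0], [1.0, 1.0]]
candidates = [[0.1, 0.0], [3.0, 3.0]]
novel = filter_novel(candidates, training, min_distance=1.0)
# Only [3.0, 3.0] survives: [0.1, 0.0] is too close to an existing concept.
```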

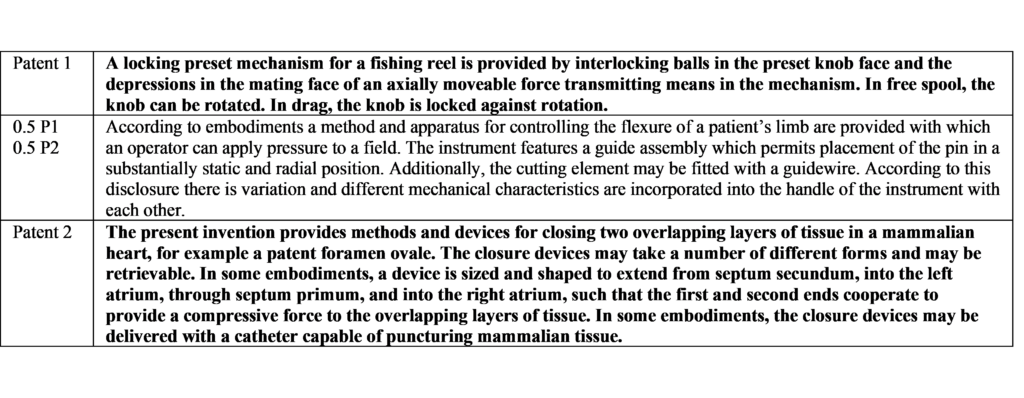

The first approach was “Concept Fusion”, where two patents are selected and interpolations formed between their document latent vectors.
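A minimal sketch of this interpolation, assuming straight-line (linear) interpolation between the two document latent vectors; other schemes, such as spherical interpolation, are equally plausible and the post does not say which was used. Each resulting point would then be passed to the decoder to generate a candidate abstract.

```python
def interpolate(z_a, z_b, steps):
    """Return `steps` evenly spaced points strictly between z_a and z_b."""
    points = []
    for i in range(1, steps + 1):
        t = i / (steps + 1)  # fraction of the way from z_a to z_b
        points.append([(1 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return points

# Toy 2-dimensional latent vectors for two patents, invented for illustration.
fused = interpolate([0.0, 0.0], [4.0, 8.0], steps=3)
# fused == [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
```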

Secondly, we used a “Latent White Space” approach, whereby random locations are selected from the vicinity of the mean position of a subset of relevant patents.
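One way to picture this sampling step is to draw points from a Gaussian centred on the mean latent vector of the chosen patents. The isotropic Gaussian and the `scale` parameter are assumptions for this sketch; the post only says that random locations near the mean were selected.

```python
import random

def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def sample_white_space(vectors, n_samples, scale, rng):
    """Draw n_samples points from an isotropic Gaussian centred on the subset mean."""
    centre = mean_vector(vectors)
    return [[rng.gauss(m, scale) for m in centre] for _ in range(n_samples)]

rng = random.Random(0)  # fixed seed so the example is repeatable
# Toy 2-dimensional latent vectors for a subset of relevant patents.
subset = [[0.0, 2.0], [2.0, 4.0]]
samples = sample_white_space(subset, n_samples=5, scale=0.1, rng=rng)
```

A larger `scale` explores further from the known patents, at the cost of decoding less coherent text.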

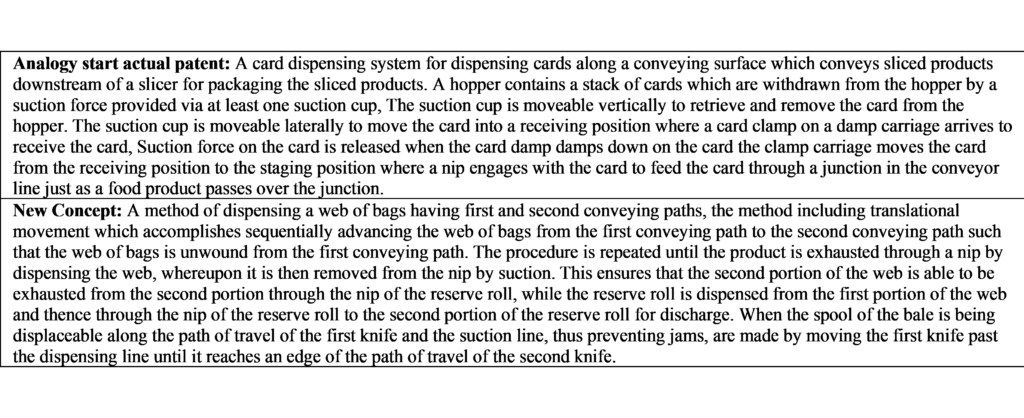

The final approach that we tested was “Citation Analogy”. This involved finding two similar patents. The differences in position between the first patent and each of its forward citations are then ‘moved’ to the second patent, giving new candidate concept locations.
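This is the same vector arithmetic as the King/Queen analogy, applied to document latent vectors. The sketch below assumes that ‘moving’ a position difference means adding the offset (citation minus source patent) to the second patent's vector; `citation_analogy` is a hypothetical helper name.

```python
def citation_analogy(z_source, z_forward_citations, z_target):
    """Apply each (citation - source) offset to the target patent's vector."""
    candidates = []
    for z_cite in z_forward_citations:
        offset = [c - s for c, s in zip(z_cite, z_source)]
        candidates.append([t + o for t, o in zip(z_target, offset)])
    return candidates

# Toy 2-dimensional latent vectors, invented for illustration.
source = [1.0, 1.0]
forward_citations = [[2.0, 1.0], [1.0, 3.0]]
target = [5.0, 5.0]
new_locations = citation_analogy(source, forward_citations, target)
# new_locations == [[6.0, 5.0], [5.0, 7.0]]
```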

So, what were our findings? We trained a document latent space on 50,000 patent abstracts and explored it to find new concepts. Each of the approaches that we tested had positive and negative aspects. The short-term conclusion is that much larger latent spaces need to be trained, and that a means of judging the quality of the generated concepts needs to be developed. All three approaches show promise and are just some of the numerous routes that we are researching to help Iprova achieve its ultimate goal of enabling everyone to invent.

Stay tuned for more news on latent spaces and the other research we are conducting. However, if you would like to explore this topic in more detail, then Nick Walker, our Chief Scientific Officer, will be presenting a more in-depth analysis of our latent space research at the AIAI conference in Crete this June. Please contact us if you have any specific queries or questions.
